SEO has become a cornerstone of content creation, and when it’s done correctly you will see a steady increase in your website’s traffic. However, some small businesses still struggle to make SEO work for them. If you’re not seeing growth in site traffic or an improvement in your page rankings, there could be a problem with the links or keywords you’re using.

The principles of technical search engine optimisation have never been more important, because Google keeps updating its algorithms and your previous SEO strategy may no longer be as effective. To help you maximise your SEO and gain more organic traffic to your site, we’ve put together the ultimate technical SEO checklist.

Keep reading to find out more about Google’s Page Experience update, how to look for crawl errors and how to fix broken links.

Technical SEO Checklist

1. Update Your Page Experience

In mid-June 2021, Google will begin updating its Page Experience signal, which combines several site performance metrics. The update will affect how websites are ranked in Google’s SERPs. Google’s algorithm will assess your site’s user experience based on the following:

- Safe browsing: Your site mustn’t contain deceptive content, malware or any form of social engineering. Google’s algorithms treat pages like these as unsafe for users, which can lower your page ranking.

- Intrusive interstitials: An intrusive interstitial is a pop-up on a website that blocks the information a visitor came for. Some sites show more than one pop-up, which is disruptive and creates a negative user experience for mobile and desktop users.

- HTTPS security: HTTPS protects the privacy and security of your users. It provides authentication and confidentiality. Ensure you have HTTPS on your website so that Google knows it’s a trustworthy site.

- Mobile-friendliness: A site’s ranking may be affected if it doesn’t translate well to mobile devices. Make your website mobile compatible so site visitors can have a positive user experience browsing through your site on their smartphones.

The new addition to the signal is Google’s Core Web Vitals, a set of measurable signals collected in the browser that cover loading speed, interactivity and visual stability. These signals determine whether a page provides a positive or negative user experience.

To ensure your site provides a positive user experience, measure your website’s Page Experience using Google’s Search Console. The tool will identify pages on your site that need improvement. For more information about how to prepare your website for this update, get in touch with the Digital Insider team or request a strategy.
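As a rough illustration of how these signals are graded, the sketch below rates sample measurements against the thresholds Google published for the three Core Web Vitals at the time of this update: Largest Contentful Paint, First Input Delay and Cumulative Layout Shift. The function name and the sample values are hypothetical; real measurements would come from Search Console or PageSpeed Insights.

```python
# Published Core Web Vitals thresholds at the time of the 2021 update:
# (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "lcp_seconds": (2.5, 4.0),  # Largest Contentful Paint
    "fid_ms": (100, 300),       # First Input Delay
    "cls": (0.1, 0.25),         # Cumulative Layout Shift
}


def rate_metric(name, value):
    """Return 'good', 'needs improvement' or 'poor' for one measurement."""
    good_limit, poor_limit = THRESHOLDS[name]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for a single page.
sample_page = {"lcp_seconds": 3.1, "fid_ms": 80, "cls": 0.3}
ratings = {name: rate_metric(name, value) for name, value in sample_page.items()}

for name, rating in ratings.items():
    print(f"{name}: {rating}")
```

A page only passes the assessment when all three metrics are rated good, so in this sample the loading and layout-shift scores would both need attention.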

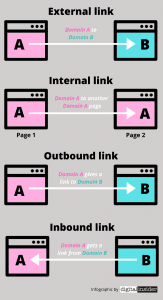

2. Fix Inbound and Outbound Links

Broken internal and external links create a poor experience for your site visitors and make it harder for search engines to crawl your site. It’s frustrating for visitors to click on a link that doesn’t take them to the page they want to visit. Check for the following to ensure your links are working correctly:

- Orphaned pages

- Links that direct visitors to an error page

Fix your broken links by updating the URL behind the anchor text, or consider removing the link if the internal page or external site no longer exists.
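The two checks above can be sketched in a few lines once you have crawl data. The example below assumes you already have a map of which pages link to which, plus the status code each URL returned; both dictionaries and the function names are hypothetical sample data, not output from any particular crawler.

```python
# Hypothetical crawl results: each page maps to the internal links found on it.
links_on_page = {
    "/": ["/services", "/blog"],
    "/services": ["/contact", "/old-offer"],
    "/blog": ["/services"],
    "/contact": [],
    "/case-studies": ["/contact"],  # no other page links here
}

# Hypothetical HTTP status code returned for each URL.
status_codes = {
    "/": 200, "/services": 200, "/blog": 200,
    "/contact": 200, "/case-studies": 200, "/old-offer": 404,
}


def find_broken_links(links_on_page, status_codes):
    """List (source page, target URL, status) for links that hit an error page."""
    broken = []
    for source, targets in links_on_page.items():
        for target in targets:
            status = status_codes.get(target)
            if status is None or status >= 400:
                broken.append((source, target, status))
    return broken


def find_orphaned_pages(links_on_page, home="/"):
    """List pages that no other page links to (the home page is exempt)."""
    linked = {target for targets in links_on_page.values() for target in targets}
    return sorted(page for page in links_on_page if page != home and page not in linked)


broken = find_broken_links(links_on_page, status_codes)
orphans = find_orphaned_pages(links_on_page)
print("Broken links:", broken)
print("Orphaned pages:", orphans)
```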

3. Integrate an XML Sitemap

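An XML sitemap helps search engines discover which pages on your site to crawl and index. It should list only URLs that return a 200 status code, and a single sitemap file can hold no more than 50,000 URLs. If you have more URLs than that, split them across several XML sitemaps so that search engines can still find every page and spend your crawl budget efficiently.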

You should avoid having URLs with parameters, duplicated content or URLs with error codes in your XML sitemap. Use Google Search Console to check for index errors with your XML sitemap.
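A minimal sketch of these rules, using only Python’s standard library: it keeps URLs that return a 200 status and have no query parameters, then writes them into one or more sitemap files. The page list and function names are hypothetical, and the per-file limit is a parameter (the sitemap protocol itself caps a file at 50,000 URLs); the example uses a tiny limit purely to show the splitting.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def is_sitemap_candidate(url, status):
    """Keep only pages that return 200 and carry no query parameters."""
    return status == 200 and not urlsplit(url).query


def build_sitemaps(pages, max_urls=50_000):
    """Return a list of sitemap XML strings, one per chunk of max_urls URLs."""
    urls = sorted({url for url, status in pages if is_sitemap_candidate(url, status)})
    sitemaps = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        sitemaps.append(ET.tostring(urlset, encoding="unicode"))
    return sitemaps


# Hypothetical crawl output: (URL, status code).
pages = [
    ("https://www.domain.com/", 200),
    ("https://www.domain.com/services", 200),
    ("https://www.domain.com/blog?page=2", 200),  # has parameters, skipped
    ("https://www.domain.com/old-offer", 404),    # error code, skipped
    ("https://www.domain.com/contact", 200),
]

sitemaps = build_sitemaps(pages, max_urls=2)
for number, xml in enumerate(sitemaps, start=1):
    print(f"sitemap-{number}.xml:", xml)
```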

4. Develop a Robots.txt File

A robots.txt file tells search engines which parts of your site they can and can’t crawl. If you want to prevent search engines from crawling certain pages, you must add a robots.txt file to your website. You also want to make sure your robots.txt file is blocking pages that search engines have no reason to crawl, such as the following:

- Admin pages

- Temporary files

- Cart or checkout pages

- URLs with parameters

Check whether your site has a robots.txt file by going to https://www.domain.com/robots.txt, using your own domain in place of www.domain.com. If you don’t have a robots.txt file, you can create one. Reference your XML sitemap in your robots.txt file with a Sitemap line, and use Google’s robots.txt tester to check that it’s working.
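Before uploading a robots.txt file, you can sanity-check its rules locally. The sketch below feeds a sample file to Python’s standard `urllib.robotparser` module and asks whether a few paths are crawlable. The directory names and sitemap URL are assumptions chosen for illustration. This parser only does simple prefix matching, so wildcard rules for parameterised URLs are left out here and should be verified with Google’s own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; adjust the paths to match your own site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/
Disallow: /cart/
Disallow: /checkout/

Sitemap: https://www.domain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/services", "/admin/login", "/cart/", "/checkout/payment"]:
    allowed = parser.can_fetch("Googlebot", "https://www.domain.com" + path)
    print(path, "->", "crawlable" if allowed else "blocked")

# Sitemap lines declared in the file (requires Python 3.8 or newer).
print(parser.site_maps())
```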

5. Fix Google Search Console Crawl Errors

Crawl errors mean that Google is having difficulty viewing the content on your website. If search engines can’t see your content, your webpage can’t be ranked. Use Google Search Console to find crawl errors on your website so you can fix them.

To fix crawl errors, start with the Coverage report in Google Search Console. Here you will see errors, excluded pages and pages that have warnings. Scan the report, fix any DNS and server errors, and recheck the affected pages with the URL Inspection tool, which replaced the older Fetch as Google tool.

The URL Inspection tool shows you how Googlebot crawls and renders a page. Take time to resolve any errors and find out why URLs have been excluded. Using Google Search Console you can track down 404 errors as well as incorrect canonical pages.

6. Page Loading Speed

Slow-loading sites can be extremely frustrating to site visitors. People will often leave a site that takes more than a few seconds to load and will not return. The ideal website load time is between one and two seconds.

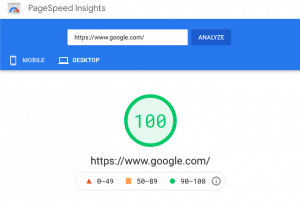

Because of Google’s Page Experience update, you want to make sure that your site loads quickly. Monitor your website’s speed whenever you run a crawl. You can also check a page’s loading speed using Google’s PageSpeed Insights tool.

To improve your website’s loading speed, work through the following:

- Use compression such as Gzip to reduce the size of JavaScript, HTML and CSS files

- Remove render-blocking JavaScript

- Optimise on-page images

- Improve server response time

- Reduce page redirects
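To get a feel for what Gzip compression saves, the sketch below compresses a block of repetitive HTML with Python’s standard `gzip` module and compares the sizes. The markup is made-up sample content, and on a live site compression is normally switched on in the web server or CDN configuration rather than in application code.

```python
import gzip

# Hypothetical page markup; real pages are similarly repetitive, which is
# why text assets such as HTML, CSS and JavaScript compress so well.
html = (
    "<!doctype html><html><head><title>Services</title></head><body>"
    + '<div class="card"><h2>Service</h2><p>Description text.</p></div>' * 200
    + "</body></html>"
).encode("utf-8")

compressed = gzip.compress(html)
saving = 1 - len(compressed) / len(html)

print(f"original: {len(html)} bytes")
print(f"gzipped:  {len(compressed)} bytes")
print(f"saving:   {saving:.0%}")
```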

7. Fix Duplicate Content Issues

Duplicated content can be caused by replicated pages or copied content. Find and fix duplicated content issues such as the following:

- Meta descriptions: Write an enticing meta description for each piece of content you publish, and make sure every one is unique. Use Google Search Console to find and fix duplicated meta descriptions and ensure they all contain a keyword that’s relevant to your content.

- Duplicated versions of your site: Google should index only one version of your site, for example either the www or the non-www address. Implement noindex or canonical tags on duplicated pages, and set up 301 redirects to the primary version of your URL.

- Title tags: Optimising your title tags is one of the basics of SEO and one of the easiest tasks to do. Title tags tell search engines what your content is about. You should never have any duplicated title tags on your site. Use Google’s Search Console to help you find duplicated title tags so you can fix them and make them unique.
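If you can export each page’s title and meta description, for instance from a site crawl, spotting duplicates is a simple grouping exercise. The sketch below assumes a hypothetical dictionary of pages and compares values case-insensitively; the URLs, text and function name are invented for illustration.

```python
from collections import defaultdict

# Hypothetical export of on-page metadata, keyed by URL path.
pages = {
    "/": {"title": "Home", "description": "Grow your organic traffic."},
    "/services": {"title": "SEO Services", "description": "We help you rank."},
    "/seo": {"title": "SEO services", "description": "Technical SEO audits."},
    "/contact": {"title": "Contact Us", "description": "We help you rank."},
}


def find_duplicates(pages, field):
    """Group URLs that share the same value for `field`, ignoring case and spacing."""
    groups = defaultdict(list)
    for url, meta in pages.items():
        normalised = " ".join(meta[field].lower().split())
        groups[normalised].append(url)
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


duplicate_titles = find_duplicates(pages, "title")
duplicate_descriptions = find_duplicates(pages, "description")

print("Duplicate titles:", duplicate_titles)
print("Duplicate descriptions:", duplicate_descriptions)
```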

8. Crawlability

Crawlability is a search engine’s ability to access and crawl your content. Broken links or dead ends on your website can affect your site’s crawlability. Other factors that affect crawlability are poor site structure, broken page redirects and server errors. To improve crawlability, do the following:

- Maximise your crawl budget

- Set a URL structure

- Optimise your site’s architecture

- Use robots.txt files

- Create an XML sitemap

- Add breadcrumb menus
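A consistent URL structure makes breadcrumb menus almost free to generate. As a sketch, the function below turns a URL path into a breadcrumb trail of labels and links. The function name and example URL are hypothetical, and the labels are derived naively from the URL slugs, so a real site would usually take them from page titles instead.

```python
from urllib.parse import urlsplit


def breadcrumb_trail(url, home_label="Home"):
    """Return a list of (label, link) pairs from the home page down to `url`."""
    parts = urlsplit(url)
    root = f"{parts.scheme}://{parts.netloc}"
    trail = [(home_label, root + "/")]
    path = ""
    for segment in filter(None, parts.path.split("/")):
        path += "/" + segment
        label = segment.replace("-", " ").title()  # naive label from the slug
        trail.append((label, root + path))
    return trail


trail = breadcrumb_trail("https://www.domain.com/services/link-building/outreach")
print(" > ".join(label for label, _ in trail))
```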

Final Thoughts

Do you need assistance with your technical search engine optimisation? Then let Digital Insider assist you with a strategy to get your website to rank naturally in SERPs. We can assist you with updating your outdated SEO so that you can continue growing your site and increase your click-through and conversion rates.

Connect with us by visiting www.digitalinsider.com.au and request your strategy today!